For AI Productivity Gains, Let Team Leaders Write the Rules

Centralized AI efforts have good intentions — but it’s time to empower team leaders in the trenches to make local implementation decisions.

Carolyn Geason-Beissel/MIT SMR | Getty Images

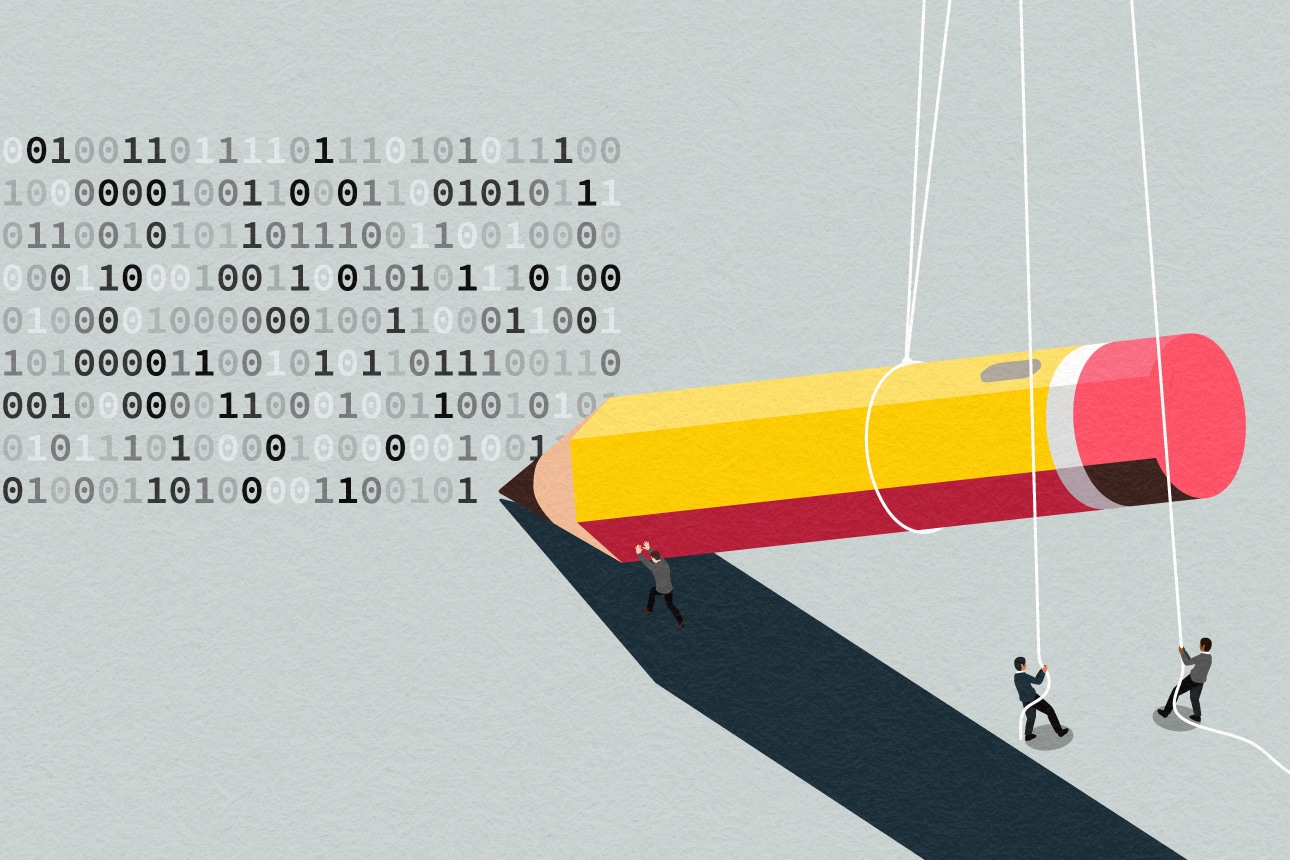

Corporations are rushing to invest in artificial intelligence. But corporatewide AI policies alone can’t transform work, according to our recent research. For AI efforts to pay off, executives must set clear guardrails while enabling teams to write the rules that make tool adoption real. Only then will AI deliver the meaningful returns that organizational leaders are pursuing.

In the productivity classes one of us (Robert) teaches at MIT, many executives are keen to learn about AI but unsure about how current corporate rules would let them use the new technology. This led to the design of a survey, taken by 348 business professionals — including senior executives, line managers, and team members from various industries — about who gets to make AI rules and how AI rules actually function inside their companies. The findings are clear-cut: Businesses have been overlooking the team dimensions of AI governance and implementation.

A striking 72% of the participants in the survey said that corporate headquarters should establish overall AI guidelines, but individual teams must set their own rules within those boundaries.

The Trouble With Centralization

Some organizations have responded to the AI craze by creating AI czars or centers of excellence, issuing corporate mandates, or establishing executive task forces. While these moves may feel decisive, they seldom change how real work gets done.

Centralization tends to clog the arteries. Teams wait for approval, workarounds proliferate, and some employees play it safe by avoiding AI productivity tools instead of harnessing them.

We’ve seen the challenges of new productivity tools before. However, the arrival of the internet didn’t lead to “internet departments,” and no one ever asked permission to open a spreadsheet. AI should be governed the same way: with principled guardrails at the corporate level and practical rules created at the team level.

AI tools can write, summarize, and analyze with speed. But AI cannot grasp context, apply judgment, or eliminate error. Responsibilities vary greatly by function, of course: The finance department is different from customer service or site operations. Because judgment is local, rules must be local and set by team leaders.

Corporate Intent Versus Team Realities

From a productivity standpoint, AI will not deliver the results executives are looking for until team leaders — the people closest to daily tasks and risks — translate broad corporate principles into actionable ground rules for work.

The survey of business professionals exposes a wide gap between corporate intent and team reality. Fewer than half the respondents (47%) said corporate AI policies reflect the realities of their work. Thirty-one percent were neutral, and 22% disagreed outright.

The result of such gaps: Teams are sometimes passing up the productivity gains from AI or using AI in unapproved ways. Employees who consider regulations to be detached from their daily work are more likely to go around official channels, placing organizations at greater risk. That’s the opposite of what corporate AI governance is supposed to achieve.

The responses to the survey underscore what most managers instinctively recognize: Productivity happens primarily at the team level. Although the C-suite may try to establish AI policies for the whole business, front-line managers are the ones turning policy into practice. It’s team leaders who will dictate how AI will be used, how quality will be maintained, and how time saved will be reinvested.

Responsible AI Scaling

That understanding is why 63% of those surveyed agreed or strongly agreed that rules established by teams enable AI adoption and the responsible use of AI. Delegating AI implementation to team leaders is not a gamble — it’s the precondition to scaling AI productively and responsibly.

Consider how this plays out by function. Marketing might use AI to compose campaign headlines, for instance, but managers must ensure that AI-generated content and edits preserve brand voice. Engineering can use AI to write unit tests and generate boilerplate code, but managers must exercise extra vigilance to maintain core system functionality. Legal can let AI suggest drafts of contracts, but managers must insist on human review before sending them out to vendors or clients.

Decentralization is not abdication. Corporate leaders still have important roles to play. They must establish guardrails around privacy, security, intellectual property, brand, and ethics. They must build secure, authorized platforms for generative AI and provide employees with training on the new tools. And they must have a single, integrated scorecard of metrics so progress can be measured and aligned with corporate budgets.

However, AI governance to date has focused too much on corporate entities — chief AI officers, task forces, and central compliance committees. What seems to be lacking is an understanding of how governance operates where work is actually accomplished: in teams. Forward-looking companies should give considerable responsibility to the leaders of each team.

As the survey demonstrates, team leaders are the missing link. Without their commitment to AI adoption, companies will continue spending billions of dollars on the new technology, with minimal returns. The message is simple: To deliver significant productivity gains from AI, companies must allow team leaders to design the implementation of AI within broad corporate guidelines.