Leadership Development Is Failing Us. Here’s How to Fix It

Executive development programs are big business, but too many fail to yield meaningful results. Here’s how to be a savvy consumer.

Helping managers and high-potential contributors develop better leadership skills can be a critical part of building organizational capabilities — but for many companies, leadership development programs are falling far short. Those responsible for selecting such programs often struggle to show how their spending has produced significant, enduring changes in participants’ individual capacities or collective outcomes, yet operating executives continue to fund these efforts without requiring such accountability.1 The result: a massive leadership development industry in which few distinguish between snake oil and effective healing potions.

Our review of leadership development programs (LDPs) at several dozen business schools around the world illustrates the typical shortcomings.2 Few program directors we surveyed could identify how the design and evaluation of their leadership development offerings consistently meet scientific standards of desired impact. Instead of documenting improvement in participants’ capabilities, for example, the majority (70%) said they settle for positive reactions to the program or evidence of knowledge gained, at least in the short term (63%). None linked their programming to changes in participants’ career trajectories, followers’ attitudes or performance, or team- or organization-level outcomes.

Similarly, our recent interviews with 46 HR executives revealed that their selection of LDPs is seldom guided strongly by evidence of effectiveness. Rather, most acknowledged that they have made such decisions with limited information, often investing significant sums based on things like the “looks” of a program (such as a well-designed website) or the charisma of the faculty. As one executive noted, leadership development decisions seem to resemble the online dating industry, where swiping left or right is based more on appearance than substance.

This problem is not inevitable. From our years of studying leadership development, developing and delivering programs, and working with chief human resources officers and chief learning officers at organizations such as Ericsson, Microsoft, Philips, the Red Cross, and Siemens, we have arrived at a simple premise: Good leadership development requires attention to three core elements — a program’s vision, method, and impact — and the integration between them.

Just a few good questions about a program, and careful parsing of the answers, can clarify how well each of these elements is designed and aligns with the others — and lead to significantly better decisions. This article will explain what makes for a quality program and outline the questions leaders and program developers should be asking to determine an LDP’s effectiveness.

A Solid Program Stands on Three Legs

A leadership development program should be fundamentally about the growth of participants. An effective one will increase participants’ knowledge, skills, or abilities. Any evaluation of an LDP should start with its vision: what capabilities or qualities the program aims to develop in participants, why this is important, and how it will address specific developmental needs.

A good program will have very specific goals — for example, that participants will develop their leadership identity, or improve their emotional intelligence or their ability to think critically under stress. And it will clearly specify the foundational thinking or evidence on which it is built. If a program developer can’t tell you what their specific vision is, why it’s a fit with your own organization’s needs and goals, and the principles it’s based on, there’s little reason to think you’re going to get meaningful results.

There’s also little reason to think you’ll reach a desired destination if you don’t have an effective plan for getting there. This is why the second key dimension to evaluate is the method of a leadership development program. This consists of curriculum (for example, participants will study illustrative cases and be taught effective techniques for strategic analysis) and teaching/learning methods (participants will discuss and debate, engage in experiential exercises, and build their own models, for instance).

The third component on which an LDP should be evaluated is its impact — the expected outcomes for participants and other stakeholders. Programs worth choosing can articulate how the participants will change. This is ultimately about a program’s ability to clearly explain its expected results, including how those results are assessed.

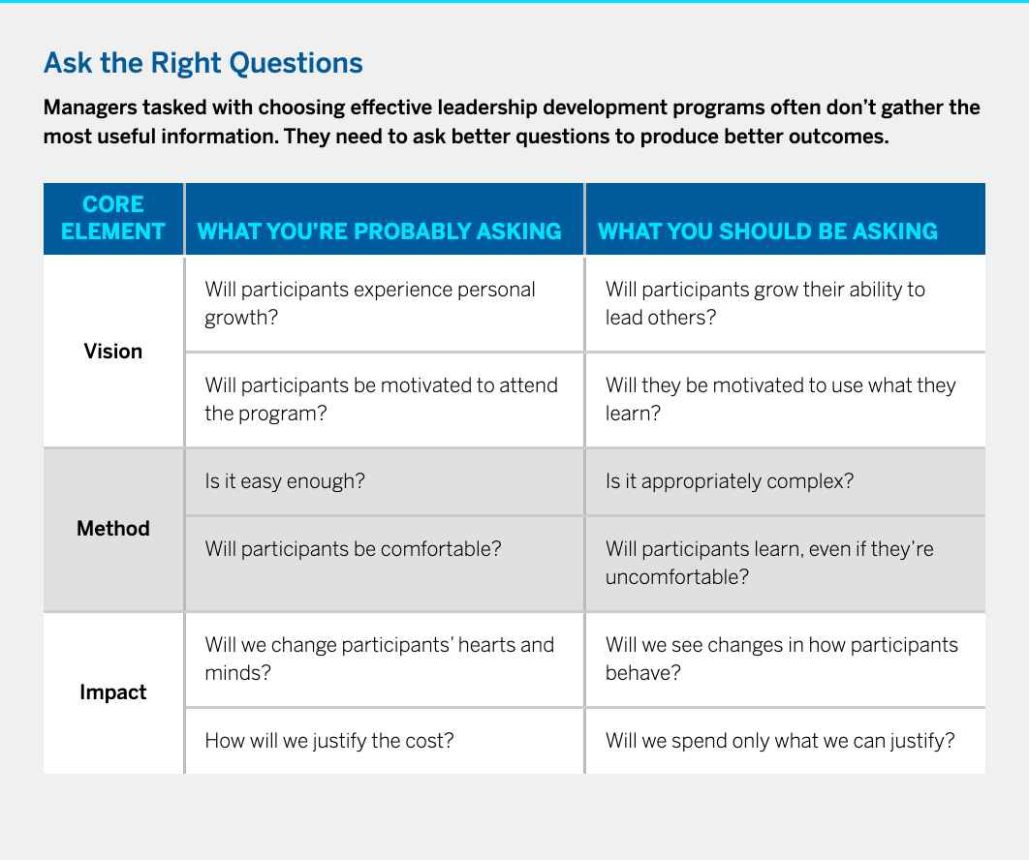

Our experience suggests that most program buyers ask at least implicit questions about the vision, method, and impact of the LDPs they are considering. However, they often ask the wrong questions or, when they do ask better ones, settle too readily for unsatisfactory answers. (See “Ask the Right Questions.”) Constrained by budget, time, or power to make operating changes, they’re understandably drawn to programs that promise simplicity, novelty, participant comfort, and high participant ratings.

Equally problematic is frequent misalignment among a program’s components. Combining vision, method, and impact into an integrated, consistent whole is required to produce meaningful results. (See “One Company’s Approach to Improving Leadership Development.”) But this alignment is missing from many programs. Consider a program that promises to produce “leaders with superior emotional intelligence skills” but does little more than assess participants’ current capacities and then provide ideas for how to improve. The components are misaligned because the method is poorly suited to the vision of behavioral skill development. A stronger offering would have participants iteratively attempt to assess and manage their own and others’ emotions in real time, with guided coaching and correction provided along the way. Similarly, asking participants whether they enjoyed an emotional intelligence program or believe they’ve improved fails to assess impact in a way that’s aligned with the vision to improve skills. A more effective assessment would use multisource ratings to document emotional intelligence behavioral capacities before and after the program.
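For readers who want to see what a before-and-after multisource comparison might look like in practice, here is a minimal sketch in Python. Everything in it is an illustrative assumption and not taken from any program discussed here: the rater groups (manager, peer, direct report), the rating scale, the participant labels, and the function name `mean_rating_change` are all hypothetical, and a real assessment would use a validated instrument and far more careful analysis.

```python
from statistics import mean


def mean_rating_change(before, after):
    """Return each participant's change in average multisource rating.

    `before` and `after` map a participant label to a dict of
    {rater_group: rating}. The rater groups and scale are hypothetical.
    """
    changes = {}
    for participant, pre_scores in before.items():
        post_scores = after[participant]
        # Average across rater groups, then compare post-program to pre-program.
        changes[participant] = mean(post_scores.values()) - mean(pre_scores.values())
    return changes


# Hypothetical ratings of one behavioral capacity, collected from
# several rater groups before and after the program.
before = {
    "participant_a": {"manager": 3.0, "peer": 3.0, "direct_report": 3.0},
    "participant_b": {"manager": 4.0, "peer": 3.0, "direct_report": 2.0},
}
after = {
    "participant_a": {"manager": 4.0, "peer": 4.0, "direct_report": 4.0},
    "participant_b": {"manager": 3.0, "peer": 4.0, "direct_report": 3.5},
}

changes = mean_rating_change(before, after)
for participant, change in changes.items():
    print(f"{participant}: {change:+.2f}")
```

The point of the sketch is only that the unit of evidence is a change in rated behavior as seen by others, not a participant’s own report of enjoyment or perceived improvement.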

The Right Questions to Ask About LDPs

If the problems with leadership development program effectiveness stem from asking the wrong questions and accepting poor answers, then the solutions begin with asking better questions. Below, we suggest six alternatives to common queries. Sharper inquiry should help program buyers gain a better sense of the overall coherence of a program’s vision, method, and impact.

Instead of asking “Will participants experience personal growth?” ask “Will participants grow their ability to lead others?” Practicing yoga or tai chi. Journaling. Exercising and eating well. Meditating. Career planning. Reducing clutter. The opportunities to grow are nearly endless. As individuals, many of us have invested resources in learning about the activities above and would claim to have grown from doing so. But it’s not clear that our capacities to lead others toward a shared objective improved as a result.

So why are these and so many other similar activities often included in leadership development? Savvy purveyors know it’s a lot easier to get an organization to sponsor something supposedly related to leadership than to call it what it is: worthwhile human development that may or may not have any relation to the key behaviors required to lead others successfully. The problem is that associating too many things with leadership development often makes it become less, not more, respected. And this overly broad labeling helps explain why organizations might fund these kinds of learning experiences when times are good — understanding that they might contribute to broader objectives like personal well-being and longer-term growth in leadership capacity — but quickly cut what seem like fun perks when budgets get tight. One of us previously taught at a university where the leadership skills programming included things like learning golf and dining etiquette. Not surprisingly, most of the faculty pointed to courses like these when arguing that leadership development is an unscientific endeavor unworthy of a serious educational institution.

That’s why it’s important to keep the program’s vision front and center. How is the proposed program related to the ability to lead others effectively, and over what time frame? There needs to be an explanation of how this learning directly contributes to key aspects of leading. This isn’t to say that nothing outside of nuts-and-bolts skills training (such as setting goals and motivating others) can be linked to leading successfully, but the links need to be explicitly made.

Research on effective leadership development points to the importance of linking what participants will learn to a needs analysis — an assessment of gaps between organizational objectives or role requirements and a learner’s current capabilities.3 Some LDP providers, such as the consulting firm Mercuri Urval, refuse to work with organizations that won’t start with a needs analysis that establishes the leadership challenges connected to company context and strategy, because it’s key to demonstrating an LDP’s ROI and impact.

Instead of asking “Will they be motivated to attend?” ask “Will they be motivated to use what they learn?” Most of us aren’t motivated to go to medical appointments because we find them enjoyable; we go only if we think they will help keep us healthy or restore us to health. That is, we’re motivated not by the experience itself but by the utility of the advice and treatment we receive.

We believe that the same logic should apply to choosing an LDP, but we’ve seen that programs are often selected more for their entertainment value than their usefulness. Programs should be selected based on expectations for what participants will be able to do as a result of having attended the program, not based on features designed primarily to entice them to attend.

Of course, motivation to attend is relevant — but it isn’t the same as motivation to subsequently use what has been learned, that is, motivation to transfer.4 That comes from programming that is sufficiently relevant to the goals or problems of participants to be purposefully recalled and applied in subsequent situations.5 This is why connecting learning and development directly to on-the-job experiences can be so powerful, though it is much more challenging than classroom learning.6

The lesson for leadership development initiatives, in a nutshell: Select programs based on the evidence about what participants will be able and motivated to do after attending, not how excited they’ll be to show up. Tell participants how the program will be useful for addressing the things they care about, and make sure the program then does so explicitly. When participants, like patients, believe that the treatment they’ve been prescribed will reduce their distress or promote their health, they’ll be motivated to stay engaged.

Instead of asking “Is it easy enough?” ask “Is it appropriately complex?” When you get on a plane, do you hope the pilot’s training was easy and convenient? Of course not. You hope that it’s been sufficiently rigorous and appropriately complex so that the pilot knows what to do when they hit unexpected weather or experience equipment malfunctions in flight. You hope the same is true for the air traffic controllers overseeing planes’ flight paths. You want their training to have been designed to prepare them to skillfully use what they’ve learned, not to maximize some other objectives. In contrast, all too often, leadership development programs are designed to maximize convenience for participants or to minimize interference with work deemed more important.

The science of effective learning has clearly shown that learning is deeper and more durable when it’s effortful.7 This means that if you want participants to reliably encode what they’ve learned into long-term memory and be able to reliably retrieve and use what they’ve learned in a variety of relevant contexts, you need to do things that both participants and educators find less appealing in real time. This includes spacing out the learning rather than massing it into a single block; interleaving new topics throughout rather than sticking to a single theme until participants have achieved mastery; and varying the conditions of practice. These techniques can make it significantly more challenging to run an LDP, but they’re crucial to supporting the ultimate goals: the sustained transfer of learning, and improved results.8

The truth is that if something is important enough to merit significant investment, it’s also likely to be complicated enough that efficacy must be prioritized over ease.

Instead of asking “Will participants be comfortable?” ask “Will participants learn, even if they’re uncomfortable?” Concerns for participants’ comfort seem to guide the design of far too many programs. It’s clear why this is the case; a program that doesn’t score well on immediate post-program satisfaction surveys might not be funded again. However, meta-analytic research evidence indicates that there is generally no significant relationship between liking an educational experience and learning from it, or between short-term satisfaction and the transfer of what has been learned.9 We know from both experience and the literature that growth comes through crucible experiences of trial and tribulation, not from being comfortable in the moment.

It’s established, for example, that development involves getting out of one’s comfort zone — facing rather than avoiding challenging experiences — and that higher-intensity practice (more physically or emotionally taxing, for instance) leads to more improvement (as long as it stops before the point of overload). We also know from psychology that avoiding or minimizing the duration or intensity of discomfort actually interferes with learning new patterns and replacing old ones.10 Put simply, you don’t overcome an aversion to public speaking or challenging interpersonal interactions by hiding from them; you do so by facing them in supportive learning environments. This is consistent with the core of cognitive behavioral therapy: To sustainably change your behavior, you have to surface, challenge, reinterpret, and replace unhelpful thoughts and feelings currently associated with difficult situations. That’s not easy or comfortable, but it is effective.

That’s why the company BOLD (Beyond Outdoor Leadership Development) seeks to advance traditional outdoor training by stretching participants beyond their comfort zones in challenging wilderness expeditions. In the pressure-cooker environment of a novel, high-stress context, participants are encouraged to experiment with new behaviors that will build or hone leadership capabilities. BOLD designs the experiences to create the deeper, more enduring well-being that comes from self-growth rather than the immediate but fleeting well-being associated directly with enjoying the experience itself.11 And, in fact, longer-term satisfaction scores often are very high as a result of the growth experienced.

Instead of asking “Will we change participants’ hearts and minds?” ask “Will we see changes in how participants behave?” Here’s another guiding belief we’re wary of: If you change people’s thinking, you’ll change their behavior. That sounds good but is problematic for at least two reasons: Changing behavior-guiding beliefs is very hard, and the link between attitudes and behavior, in general, just isn’t that strong. If this sounds counterintuitive, consider how often people’s belief that exercise is good for them, their desire to be healthy, and even their intention to work out 30 minutes a day don’t reliably result in the desired behavior — actually exercising.

It’s well documented that by adulthood, people vary considerably in how they see the world — how, for example, they prioritize different values and how strongly they endorse different beliefs. Adult attitudes are deeply ingrained and far too resistant to change for a short intervention to be likely to alter them.

Even if it were easy to change attitudes, decades of research suggest that there’s a low likelihood that this would translate to sustained behavior change. In general, attitudes don’t predict relevant behaviors very well; they become more reliably connected only when people consciously set up concrete behavioral commitments and accountability processes and do the hard work associated with forming new habits. Other research suggests that it’s useful to think about value change as a desired product of behavior change (not its cause) because our sensemaking about what we do can influence what we believe.12

Scholars of diversity, equity, and inclusion in organizations know all too well that leadership programs focused on DEI beliefs or attitudes can leave daily behaviors largely unchanged. Even programs that show clear positive effects on attitudes often have limited impact on behaviors. Whether this reflects the general challenges of connecting attitude change to behavioral change or another possibility (that people often espouse values that don’t reflect their actual beliefs, perhaps because they know what the socially accepted position is), the result is the same. Changing what people say they believe is seldom the ultimate vision of an LDP, and it’s often a poor proxy for how they subsequently behave. So why not measure what tends to matter more: what people do differently following a development program?

Instead of asking “How will we justify the spend?” ask “Will we spend only what we can justify?” Those who run operating units of any size are similar to venture investors and loan officers: They’re not interested in (re)investing in poorly justified opportunities, because their own outcomes depend on the return on what they put in. Therefore, make a solid case for the ROI of LDPs. Communications consultant Nancy Duarte suggests, for example, that if you believe pitch coaching is a valuable development area for salespeople, then your program should include a plan to track how the pitches that participants develop and practice during the program are subsequently used and whether they increase contract renewals or new sales.13 Or, if you’re hiring a partner to deliver the LDP, insist that it also be invested in its outcomes. Consider the example of Great Lakes Institute of Management, a consulting firm in India: It has a pay-for-performance fee structure wherein a percentage of its compensation is tied directly to noticeable post-program changes in participants.

Here’s a more provocative idea for those in leadership development: If you can’t make a compelling case for investment, don’t spend the money you were allocated. Producing underwhelming results with the budgeted amount doesn’t compel future investment; rather, it undermines it by reinforcing skeptics’ beliefs that the returns aren’t worth the expense. So if you know you can expect meaningful outcomes only with twice the allocation you have, consider developing a compelling explanation for why you’re going to run the program only every other year. Refusing to spend what you have might feel risky, but the courage to do so might be what it takes to inspire longer-term support for leadership development.

None of this means that the only useful investments are those that can be tied directly and immediately to financial outcomes. That’s a decidedly limited view of human development that ignores the myriad ways in which employee growth and well-being are also indirectly related to individual and organizational objectives. But that doesn’t obviate the need for the rigorous thinking and sound measurement that are central to good leadership development.

Partner With Program Developers for Better Results

Breaking the cycle of wasted resources and disappointing results from leadership development programs won’t come solely from more rigorous evaluation of programs by buyers. It’s unlikely to happen unless key stakeholders stop blaming each other after the fact and start working together constructively upfront. What’s required is a stronger partnership between the senior executives who sponsor leadership development and the HR personnel and/or program developers who select, manage, and deliver it. Neither side can align vision, method, and impact without the support of the other.

In a healthy partnership, both parties have a seat at the table, agree to acknowledge their responsibility for what is and what should be, and agree to make changes when things aren’t working. Leadership development personnel might, for example, push back harder on demands for convenient delivery schedules and reject a focus on program satisfaction scores. And they could commit to doing better at linking programming and its results to key organizational objectives. For their part, operating executives could stop complaining that development takes participants away from their “real jobs” for too long and start looking beyond short-term outcomes when evaluating programs. Likewise, senior leaders could stop linking LDP funding to short-term operating results — a common practice that makes it likely that more time will be spent adjusting programs to available resources than on ways to optimally and demonstrably meet participants’ needs. And leaders can support the transfer of what’s learned by positively recognizing and financially rewarding people when new behaviors are utilized on the job.

It’s long past time to expect more from leadership development programs. We know the questions to ask and the answers to expect. What’s needed most is the collective will of key decision makers to do what it takes.

References

1. P. Vongswasdi, H. Leroy, J. Claeys, et al., “Beyond Developing Leaders: Toward a Multinarrative Understanding of the Value of Leadership Development Programs,” Academy of Management Learning & Education (forthcoming), published online June 12, 2023.

2. H. Leroy, M. Anisman-Razin, B.J. Avolio, et al., “Walking Our Evidence-Based Talk: The Case of Leadership Development in Business Schools,” Journal of Leadership & Organizational Studies 29, no. 1 (February 2022): 5-32.

3. C.N. Lacerenza, D.L. Reyes, S.L. Marlow, et al., “Leadership Training Design, Delivery, and Implementation: A Meta-Analysis,” Journal of Applied Psychology 102, no. 12 (December 2017): 1686-1718; H. Aguinis and K. Kraiger, “Benefits of Training and Development for Individuals and Teams, Organizations, and Society,” Annual Review of Psychology 60 (2009): 451-474; and D.S. DeRue and C.G. Myers, “Leadership Development: A Review and Agenda for Future Research,” ch. 37 in “The Oxford Handbook of Leadership and Organizations,” ed. D.V. Day (Oxford, England: Oxford University Press, 2014).

4. R.A. Noe, “Trainees’ Attributes and Attitudes: Neglected Influences on Training Effectiveness,” Academy of Management Review 11, no. 4 (October 1986): 736-749; and C.M. Axtell, S. Maitlis, and S.K. Yearta, “Predicting Immediate and Longer-Term Transfer of Training,” Personnel Review 26, no. 3 (June 1997): 201-213.

5. V. Burke and D. Collins, “Optimising the Effects of Leadership Development Programmes: A Framework for Analysing the Learning and Transfer of Leadership Skills,” Management Decision 43, no. 7/8 (August 2005): 975-987.

6. L. Dragoni, P.E. Tesluk, J.E.A. Russell, et al., “Understanding Managerial Development: Integrating Developmental Assignments, Learning Orientation, and Access to Developmental Opportunities in Predicting Managerial Competencies,” Academy of Management Journal 52, no. 4 (August 2009): 731-743.

7. P.C. Brown, H.L. Roediger III, and M.A. McDaniel, “Make It Stick: The Science of Successful Learning” (Cambridge, Massachusetts: Harvard University Press, 2014).

8. Lacerenza et al., “Leadership Training Design, Delivery, and Implementation,” 1686-1718.

9. G.M. Alliger, S.I. Tannenbaum, W. Bennett Jr., et al., “A Meta-Analysis of the Relations Among Training Criteria,” Personnel Psychology 50, no. 2 (June 1997): 341-358; M. Gessler, “The Correlation of Participant Satisfaction, Learning Success, and Learning Transfer: An Empirical Investigation of Correlation Assumptions in Kirkpatrick’s Four-Level Model,” International Journal of Management in Education 3, no. 3-4 (June 2009): 346-358; and W. Arthur Jr., W. Bennett Jr., P.S. Edens, et al., “Effectiveness of Training in Organizations: A Meta-Analysis of Design and Evaluation Features,” Journal of Applied Psychology 88, no. 2 (April 2003): 234-245.

10. S.M. Blakey and J.S. Abramowitz, “The Effects of Safety Behaviors During Exposure Therapy for Anxiety: Critical Analysis From an Inhibitory Learning Perspective,” Clinical Psychology Review 49 (November 2016): 1-15.

11. R.M. Ryan and E.L. Deci, “On Happiness and Human Potentials: A Review of Research on Hedonic and Eudaimonic Well-Being,” Annual Review of Psychology 52 (2001): 141-166.

12. R. Fischer, “From Values to Behavior and From Behavior to Values,” ch. 10 in “Values and Behavior: Taking a Cross Cultural Perspective,” ed. S. Roccas and L. Sagiv (Cham, Switzerland: Springer, 2017).

13. N. Duarte, “Make Your Case for Communication Upskilling,” MIT Sloan Management Review, Aug. 3, 2023, https://sloanreview.mit.edu.